The rapid advancement of artificial intelligence (AI) brings real risks and challenges alongside its benefits. In this article, we explore strategies for addressing the growing concerns around AI development, from ethics and regulation to human oversight, and consider how to harness the power of AI while keeping its use responsible and safe. The aim is to show how we can navigate the complexities of AI and limit unintended consequences. After all, it’s in our hands to shape the future of AI for the better.

Understanding Artificial Intelligence

Artificial Intelligence (AI) has become a prominent topic in today’s technological landscape. At its core, AI refers to the ability of machines to mimic and perform tasks that would typically require human intelligence. This encompasses a wide range of capabilities, from problem-solving and decision-making to natural language processing and pattern recognition. By understanding the various aspects of AI, we can better appreciate its potential and implications.

Defining Artificial Intelligence

Artificial Intelligence can be broadly defined as the theory and development of computer systems that possess the ability to perform tasks that would typically require human intelligence. These tasks can range from simple and repetitive actions to complex problem-solving and decision-making processes. AI systems achieve this by utilizing algorithms, data, and machine learning techniques to analyze information, recognize patterns, and make informed predictions or recommendations.

Different Types of AI

Artificial Intelligence can be categorized into different types based on capabilities and functionality: narrow or weak AI, general or strong AI, and superintelligent AI. Narrow AI refers to systems designed to perform specific tasks, such as virtual assistants, image recognition, or recommendation algorithms; the systems in widespread use today are generally placed in this category. General AI, by contrast, is a still-hypothetical form of AI that would display human-level intelligence across many domains and engage in a broad range of activities. Lastly, superintelligent AI is a theoretical form of AI that would surpass human intelligence and possess abilities beyond our current comprehension.

Importance of AI

The importance of AI lies in its potential to revolutionize various industries and improve human lives. AI technologies are already being applied in fields such as healthcare, finance, transportation, and manufacturing, contributing to increased efficiency, accuracy, and productivity. AI-powered systems can assist in diagnosing diseases, analyzing complex datasets, optimizing resource allocation, and enhancing customer experiences. Moreover, AI has the potential to address some of the world’s most pressing challenges, including climate change, poverty, and inequality, by providing innovative solutions and insights.

Ethical Concerns with Artificial Intelligence

While the development and proliferation of AI bring about numerous benefits, there are also ethical concerns that must not be overlooked. As AI becomes more advanced and integrated into society, it is crucial to address these concerns to ensure the responsible and ethical deployment of AI systems.

Job Automation and Unemployment

One of the primary ethical concerns with AI is the potential impact on jobs and employment. As AI systems automate tasks that were previously performed by humans, there is a concern that widespread adoption of AI could lead to job displacement and increased unemployment rates. It is crucial to find a balance between automation and preserving employment opportunities by identifying areas where humans can complement AI technologies.

Loss of Human Creativity and Decision-making

Another ethical concern is the possible loss of human creativity and decision-making as AI systems become increasingly capable of performing complex tasks. It is essential to recognize and value the unique qualities that humans bring to the table, such as empathy, intuition, and ethical judgment. By striking a balance between human and AI collaboration, we can ensure that decision-making processes incorporate both rationality and human values.

Bias and Discrimination

AI systems learn from the data they are trained on, making them vulnerable to biases present in the datasets. This poses a significant ethical concern as biased AI algorithms can perpetuate discrimination and reinforce societal inequalities. It is imperative for AI developers and stakeholders to actively address and mitigate biases in AI training data and algorithms to ensure fairness and equity in AI applications.
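
One simple way to make the idea of measurable bias concrete is to compare how often a system gives a favorable decision to each group. The sketch below computes per-group selection rates and the gap between them (often called the demographic parity difference). The function names, group labels, and sample data are hypothetical, and a real fairness review would look at several metrics rather than this one alone.

```python
from collections import defaultdict


def selection_rates(records):
    """Return the fraction of favorable decisions for each group.

    `records` is an iterable of (group, decision) pairs, where
    `decision` is True for a favorable outcome.
    """
    totals = defaultdict(int)
    favorable = defaultdict(int)
    for group, decision in records:
        totals[group] += 1
        if decision:
            favorable[group] += 1
    return {group: favorable[group] / totals[group] for group in totals}


def demographic_parity_gap(records):
    """Return the difference between the highest and lowest selection rate."""
    rates = selection_rates(records)
    return max(rates.values()) - min(rates.values())


# Hypothetical audit sample: (group, was the decision favorable?)
decisions = [
    ("group_a", True), ("group_a", True), ("group_a", False), ("group_a", True),
    ("group_b", True), ("group_b", False), ("group_b", False), ("group_b", False),
]

rates = selection_rates(decisions)
gap = demographic_parity_gap(decisions)
print(rates)  # {'group_a': 0.75, 'group_b': 0.25}
print(gap)    # 0.5
```

A gap near zero means the groups receive favorable decisions at similar rates; a large gap is a signal to investigate the training data and the model, not proof of discrimination on its own.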

Privacy and Data Security

With AI systems requiring vast amounts of data to learn and make informed decisions, privacy and data security become critical ethical considerations. The collection, storage, and usage of personal data must adhere to strict ethical and legal standards to protect individuals’ privacy and prevent potential abuses. Robust cybersecurity measures must also be implemented to safeguard AI systems against malicious attacks and unauthorized access.
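
As an illustration of one common data-protection practice, the sketch below replaces a direct identifier with a keyed hash (pseudonymization) and drops fields that a model does not need (data minimization). It uses only Python's standard `hmac` and `hashlib` modules. The record layout, field names, and key are hypothetical, and pseudonymized data can still count as personal data under many privacy laws, so this is one safeguard among several rather than a complete solution.

```python
import hashlib
import hmac


def pseudonymize(identifier: str, secret_key: bytes) -> str:
    """Return a stable keyed hash of an identifier (HMAC-SHA256, hex-encoded)."""
    return hmac.new(secret_key, identifier.encode("utf-8"), hashlib.sha256).hexdigest()


def minimize_record(record: dict, secret_key: bytes, keep_fields: set) -> dict:
    """Keep only the allowed fields and swap the email for a pseudonymous token."""
    cleaned = {key: value for key, value in record.items() if key in keep_fields}
    cleaned["user_token"] = pseudonymize(record["email"], secret_key)
    return cleaned


# Hypothetical key; in practice it would come from a secrets manager, not source code.
KEY = b"demo-key-not-for-production"

record = {
    "email": "alice@example.com",
    "full_name": "Alice Example",
    "age_band": "30-39",
    "country": "DE",
}

cleaned = minimize_record(record, KEY, keep_fields={"age_band", "country"})
token = cleaned["user_token"]
print(sorted(cleaned))  # ['age_band', 'country', 'user_token']
```

Using a keyed hash rather than a plain hash means that someone without the key cannot rebuild the tokens simply by hashing a list of known email addresses.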

Regulatory Measures

To ensure the responsible development and deployment of AI, regulatory measures need to be in place. Government oversight, the establishment of AI ethics committees, and licensing and certification programs for AI developers can help navigate the complexities and potential risks associated with AI technologies.

Government Oversight and Regulation

Strong government oversight and regulation are essential to ensure the ethical and responsible development, deployment, and use of AI systems. Governments should collaborate with experts, industry leaders, and relevant stakeholders to establish regulatory frameworks and guidelines that address the ethical concerns and potential risks associated with AI. These regulations should cover aspects such as data privacy, bias mitigation, accountability, and transparency.

Creation of AI Ethics Committees

The creation of AI ethics committees can provide valuable guidance and promote accountability in AI development and deployment. These committees should consist of multidisciplinary experts, including ethicists, technologists, legal professionals, and representatives from impacted communities. They can work together to establish ethical guidelines, review AI applications, and ensure that AI technologies are aligned with societal values and interests.

Licensing and Certification for AI Developers

Implementing licensing and certification programs for AI developers can help ensure that those involved in AI development possess the necessary skills, knowledge, and ethical understanding to create responsible and trustworthy AI systems. These programs can include educational requirements, adherence to ethical standards, and ongoing professional development to keep up with evolving AI technologies and ethical considerations.

Limiting AI Development

While the benefits of AI are significant, it is crucial to consider certain limitations and potential risks associated with its development. By implementing measures such as financial constraints on AI research, restricting access to advanced AI technologies, and enforcing moratoriums on certain AI applications, we can mitigate potential risks and protect societal well-being.

Financial Constraints on AI Research

Introducing financial constraints on AI research can help prevent AI technologies from being developed or misused without proper consideration of their ethical implications. This can be achieved through government funding restrictions, regulations on private sector investments, or ethical review processes for AI research proposals. By directing resources toward responsible and ethical AI research, we can prioritize the development of beneficial AI systems.

Restricting Access to Advanced AI Technologies

Strategic restrictions on access to advanced AI technologies can help prevent the misuse or unauthorized use of powerful AI systems. Governments can establish oversight mechanisms and licensing requirements for the development and deployment of advanced AI technologies to limit access to actors who possess the necessary expertise and adhere to ethical guidelines. This approach ensures that AI is used responsibly and in the best interest of society.

Moratorium on Certain AI Applications

In cases where the potential risks and ethical concerns associated with specific AI applications outweigh the benefits, imposing temporary moratoriums can be a viable option. This allows for further research, public consultation, and the development of appropriate regulations before the deployment of potentially harmful AI systems. By taking a precautionary approach, we can address the safety and ethical implications of AI applications before they are deployed.

Transparency and Accountability

Transparency and accountability are crucial aspects of AI development and deployment. By promoting algorithmic transparency, explainable AI, and independent auditing of AI systems, we can foster trust, ensure fairness, and hold those who build and deploy AI systems accountable for their outcomes.

Algorithmic Transparency

Algorithmic transparency refers to the openness and understanding of AI algorithms and processes. By making the inner workings of AI systems transparent and accessible, developers can address potential biases, errors, or unintended consequences. Transparency allows for external scrutiny, encourages dialogue, and facilitates the identification and correction of ethical shortcomings.

Explainable AI

Explainable AI aims to provide insights into how AI systems arrive at their decisions and predictions. This helps users understand the reasoning behind AI outputs and enables them to assess the fairness, accuracy, and ethical implications of these outcomes. By prioritizing explainability, we can ensure that AI systems are accountable and avoid the creation of “black box” technologies.
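
For a very simple model, an explanation can be as direct as showing how much each input pushed the result up or down. The sketch below does this for a linear scoring model, where each feature's contribution is its weight multiplied by its value. The weights, feature names, and applicant values are hypothetical, and more complex models generally need dedicated explanation techniques that this example does not cover.

```python
def explain_linear_score(weights: dict, bias: float, features: dict):
    """Return the score and per-feature contributions, largest effect first.

    For a linear model, score = bias + sum(weight * value), so each
    term of the sum is that feature's contribution to the decision.
    """
    contributions = {name: weights[name] * value for name, value in features.items()}
    score = bias + sum(contributions.values())
    ranked = sorted(contributions.items(), key=lambda item: abs(item[1]), reverse=True)
    return score, ranked


# Hypothetical credit-style model and applicant
weights = {"income": 0.5, "debt": -1.0, "years_employed": 0.25}
bias = 1.0
applicant = {"income": 4.0, "debt": 3.0, "years_employed": 4.0}

score, ranked = explain_linear_score(weights, bias, applicant)
print(score)  # 1.0
for name, contribution in ranked:
    print(f"{name}: {contribution:+.2f}")
# debt: -3.00
# income: +2.00
# years_employed: +1.00
```

Here a reader can see at a glance that debt lowered the score the most, which is the kind of reasoning a "black box" system would hide.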

Independent Auditing of AI Systems

Independent auditing of AI systems can provide an external assessment of their ethics, fairness, and compliance with established regulations. Auditing processes can involve the review of AI algorithms, datasets, and decision-making processes to identify biases, potential harms, or ethical violations. Independent audits contribute to the transparency and accountability of AI technologies, inspiring confidence in their responsible use.

Collaboration and International Cooperation

Given the global nature of AI development and deployment, collaboration and international cooperation are essential for formulating cohesive and effective ethical frameworks. By establishing international AI regulations, sharing best practices and knowledge, and promoting ethical AI standards, we can ensure that AI technologies are deployed responsibly and harmoniously across borders.

Establishing International AI Regulations

International collaboration is crucial in establishing consistent and cohesive ethical frameworks for AI. Governments, intergovernmental organizations, and other stakeholders can come together to develop international agreements, standards, and guidelines that address the ethical concerns and potential risks associated with AI. This collaboration ensures that AI technologies are developed and used in a manner that respects human rights, fundamental freedoms, and societal values.

Sharing Best Practices and Knowledge across Nations

Sharing best practices, experiences, and knowledge is pivotal in fostering responsible AI development and deployment. Countries and organizations can engage in open dialogues, conferences, and collaborative initiatives to exchange insights and lessons learned. This exchange enables the identification and replication of successful ethical practices and strategies, promoting consistency and improving the ethical landscape of AI globally.

Promoting Ethical AI Standards

Promoting ethical AI standards helps set clear expectations and benchmarks for AI developers and users. International collaborations can lead to the establishment of ethical guidelines, principles, and certification programs that ensure the responsible and ethical deployment of AI technologies. By upholding and promoting these standards, we can create a global AI ecosystem that prioritizes human well-being, fairness, and transparency.

Promoting Human-Centric AI

To ensure that AI serves humans’ best interests, it is essential to design AI systems with human values in mind, incorporate ethical principles into AI development, and foster collaboration between humans and AI technologies.

Designing AI with Human Values in Mind

Designing AI systems with human values in mind ensures that these systems align with societal goals and aspirations. Human-centered design processes involve engaging diverse stakeholders, considering ethical implications, and mitigating potential harms. By prioritizing human values, AI can be developed and deployed to enhance human well-being, address societal challenges, and promote positive social outcomes.

Incorporating Ethical Principles into AI Development

Ethical principles should be integrated into the development and deployment of AI technologies. These principles can include fairness, transparency, accountability, privacy, and respect for human rights. By emphasizing ethical considerations throughout AI development, we can build systems that are sensitive to social and cultural contexts, avoid discriminatory outcomes, and uphold fundamental ethical standards.

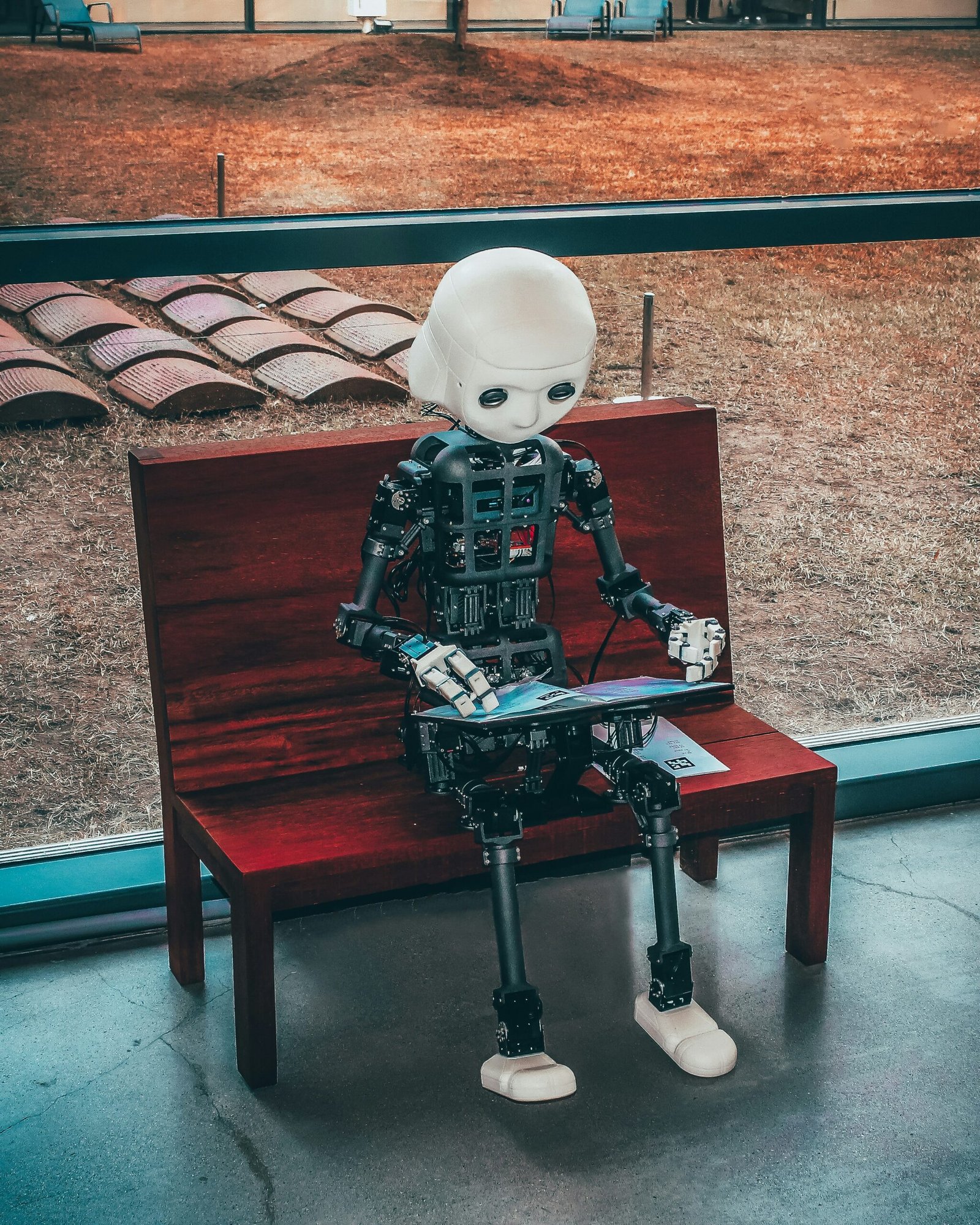

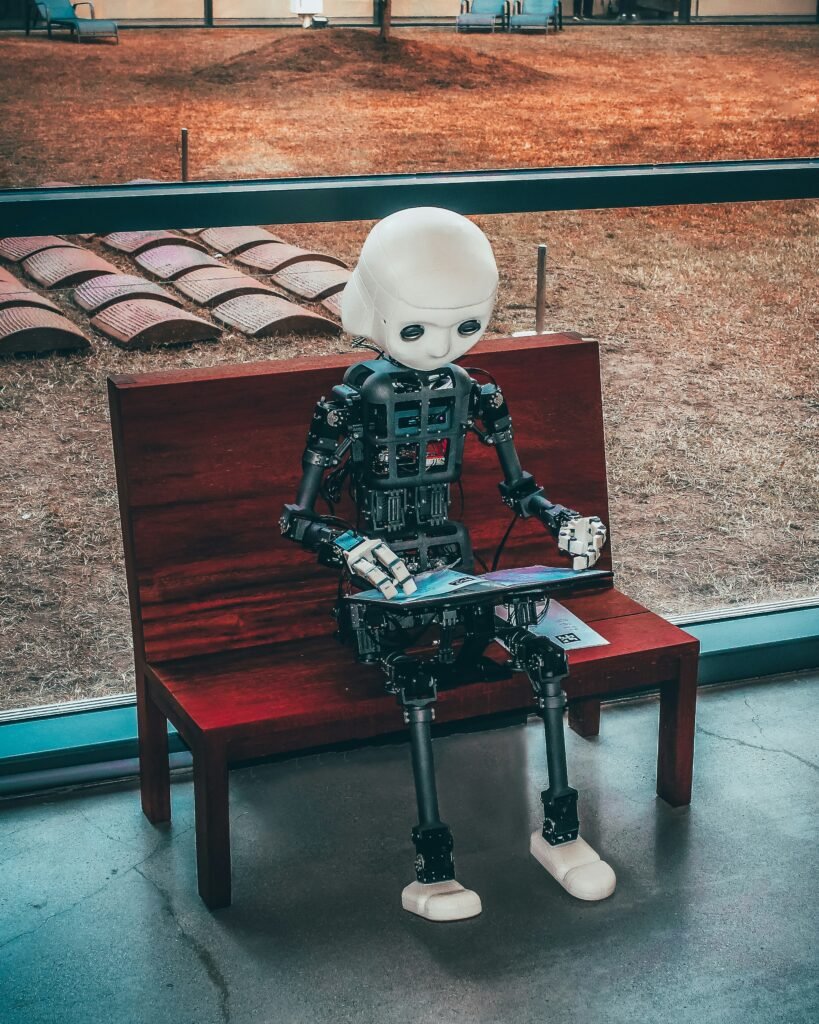

Fostering Human-AI Collaboration

Rather than replacing humans, AI should be seen as a tool to augment human capabilities, fostering collaboration between humans and AI technologies. This human-AI collaboration enables humans to leverage the strengths of AI, such as data processing and pattern recognition, while maintaining autonomy, creativity, and decision-making abilities. By emphasizing collaboration, we can create AI systems that empower humans and complement their unique qualities.

Education and Awareness

As AI continues to shape our society, promoting education and awareness about AI is crucial. By raising public awareness, promoting AI literacy, and providing comprehensive AI education and training, we can ensure that individuals have the knowledge and skills to navigate the ethical implications and understand the potential benefits of AI technologies.

Raising Public Awareness about AI

Raising public awareness about AI involves disseminating information about AI technologies, their potential applications, and their ethical implications. This can be achieved through public campaigns, educational programs, and community engagement initiatives. By providing accessible and accurate information, we can empower individuals to make informed decisions, engage in dialogue, and actively participate in shaping the ethical landscape of AI.

Promoting AI Literacy

Promoting AI literacy entails equipping individuals with the knowledge and understanding necessary to comprehend the basic principles, concepts, and terminology of AI. Incorporating AI education into school curricula, offering AI courses and workshops, and providing training opportunities for professionals in various industries are effective ways to promote AI literacy. By enhancing AI literacy, we can ensure that individuals are equipped to navigate the ethical considerations and responsibly use AI technologies.

Providing Comprehensive AI Education and Training

Comprehensive AI education and training involve equipping individuals with the technical skills, ethical understanding, and critical thinking abilities necessary for AI-related careers and decision-making. This can include AI-specific training programs, courses on ethical AI design and deployment, and interdisciplinary AI education that combines technical knowledge with ethical considerations. By providing comprehensive education and training, we can cultivate a workforce adept at addressing AI’s potential risks and harnessing its benefits responsibly.

Ensuring Safety and Security

Ensuring the safety and security of AI technologies is paramount. By implementing robust testing and validation processes, establishing safety protocols for AI systems, and addressing cybersecurity risks, we can minimize the potential harms and risks associated with AI deployment.

Robust Testing and Validation of AI Systems

Robust testing and validation processes are essential to ensure the reliability, accuracy, and safety of AI systems. Rigorous testing methodologies, including stress testing, edge-case scenarios, and adversarial testing, help identify and rectify potential issues and vulnerabilities. Regular validation and evaluation procedures are also crucial to keep AI systems up to date, responsive to changing environments, and accountable for their performance.
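
To show what edge-case and perturbation testing can look like in practice, the sketch below runs a stand-in model against boundary inputs and then measures how often small random noise changes its output. The `toy_model` function, the threshold, and the test values are all hypothetical; a real system would use its own model and test cases drawn from its domain.

```python
import random


def toy_model(x: float) -> int:
    """Hypothetical stand-in model: flags inputs at or above 0.5."""
    return 1 if x >= 0.5 else 0


def run_edge_cases(model, cases):
    """Return a list of (input, expected, actual) for every failing case."""
    failures = []
    for value, expected in cases:
        try:
            actual = model(value)
        except Exception as exc:  # a crash on odd input counts as a failure
            actual = f"error: {exc}"
        if actual != expected:
            failures.append((value, expected, actual))
    return failures


def perturbation_flip_rate(model, inputs, epsilon, trials, seed=0):
    """Return the fraction of small random perturbations that change the output."""
    rng = random.Random(seed)  # fixed seed keeps the test repeatable
    flips = 0
    total = 0
    for x in inputs:
        baseline = model(x)
        for _ in range(trials):
            noisy = x + rng.uniform(-epsilon, epsilon)
            if model(noisy) != baseline:
                flips += 1
            total += 1
    return flips / total


edge_cases = [
    (0.0, 0),
    (0.5, 1),            # exactly on the decision boundary
    (1.0, 1),
    (float("inf"), 1),
    (float("nan"), 0),   # comparisons with NaN are False, so the model returns 0
]

edge_failures = run_edge_cases(toy_model, edge_cases)
far_rate = perturbation_flip_rate(toy_model, [0.1, 0.9], epsilon=0.05, trials=100)
near_rate = perturbation_flip_rate(toy_model, [0.5], epsilon=0.05, trials=100)
print(edge_failures)  # []
print(far_rate)       # 0.0
```

Inputs far from the decision boundary should be stable under small noise, while a high flip rate near the boundary (`near_rate` here) shows where the model's outputs are fragile and deserve closer review.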

Implementing Safety Protocols for AI

Implementing safety protocols for AI systems involves incorporating fail-safe mechanisms, contingencies, and mitigation strategies to minimize potential risks and harms. These protocols can include error detection and correction, graceful degradation, and failover. By prioritizing safety, we can develop AI systems that mitigate risks and minimize unintended consequences.
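
A fail-safe mechanism can be as small as a wrapper that checks a model's output and falls back to a conservative default when something goes wrong. The sketch below illustrates that pattern of error detection and graceful degradation. The `risk_model` and `conservative_default` functions and the valid range of 0.0 to 1.0 are hypothetical choices made for the example.

```python
def guarded_predict(primary, fallback, x, low=0.0, high=1.0):
    """Use the primary model when its output is valid; otherwise degrade gracefully.

    Returns (prediction, source), where source records which path was taken
    so that fallbacks can be logged and reviewed.
    """
    try:
        y = primary(x)
        # Error detection: accept only numeric outputs inside the valid range.
        # NaN fails this comparison, so it is rejected as well.
        if isinstance(y, (int, float)) and low <= y <= high:
            return y, "primary"
    except Exception:
        pass  # any crash in the primary model triggers the fallback
    return fallback(x), "fallback"


def risk_model(x):
    """Hypothetical primary model: fails on negative input, can exceed the range."""
    if x < 0:
        raise ValueError("negative input")
    return x * 2


def conservative_default(x):
    """Hypothetical fallback: report the highest risk so a human reviews the case."""
    return 1.0


print(guarded_predict(risk_model, conservative_default, 0.25))  # (0.5, 'primary')
print(guarded_predict(risk_model, conservative_default, 0.8))   # (1.0, 'fallback')
print(guarded_predict(risk_model, conservative_default, -1.0))  # (1.0, 'fallback')
```

The design choice worth noting is that the fallback errs on the side of caution: when the system cannot trust its own output, it routes the case toward human review instead of guessing.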

Addressing Cybersecurity Risks in AI

Cybersecurity risks pose a significant threat to the deployment and use of AI technologies. AI systems can be vulnerable to attacks, data breaches, and malicious exploitation. Addressing cybersecurity risks entails implementing robust security measures, encryption protocols, and access controls to protect AI systems and the data they handle. By fortifying the security of AI technologies, we can prevent potential breaches and ensure the responsible and secure use of AI.

Continuous Monitoring and Evaluation

Continuous monitoring and evaluation are vital for assessing the impacts of AI on society and identifying areas for improvement. By monitoring AI development and deployment, evaluating societal impacts, and iterating on ethical frameworks, we can adapt to evolving challenges and ensure responsible AI practices.

Monitoring AI Development and Deployment

Continuously monitoring AI development and deployment allows for the identification of emerging risks, unethical practices, or unintended consequences. Monitoring can involve the analysis of AI systems, assessment of data sources, and tracking societal outcomes. By proactively monitoring AI technologies, we can address potential issues promptly and adapt ethical guidelines accordingly.
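
One small, concrete form of monitoring is checking whether a deployed system's outputs have drifted away from what was observed when it was validated. The sketch below compares the average of recent outputs with a baseline and raises an alert when the difference is large relative to the baseline's variability. The function name, threshold, and sample values are hypothetical, and this simple mean comparison is only one of many possible drift checks.

```python
import statistics


def drift_alert(baseline, recent, threshold=3.0):
    """Return (alert, z), where z measures how far the recent mean has moved.

    z is the shift in the mean divided by the standard error expected
    for a sample the size of `recent`, based on the baseline's spread.
    """
    mu = statistics.mean(baseline)
    sigma = statistics.stdev(baseline)
    shift = statistics.mean(recent) - mu
    if sigma == 0:
        # A constant baseline: any change at all counts as drift.
        return shift != 0, float("inf") if shift != 0 else 0.0
    standard_error = sigma / len(recent) ** 0.5
    z = shift / standard_error
    return abs(z) > threshold, z


# Hypothetical model scores recorded at validation time and in two later windows
baseline_scores = [0.48, 0.52, 0.50, 0.49, 0.51, 0.50, 0.47, 0.53]
stable_window = [0.50, 0.51, 0.49, 0.50]
shifted_window = [0.70, 0.72, 0.69, 0.71]

stable_alert, stable_z = drift_alert(baseline_scores, stable_window)
shifted_alert, shifted_z = drift_alert(baseline_scores, shifted_window)
print(stable_alert)   # False
print(shifted_alert)  # True
```

An alert like this does not say what went wrong; it tells the people responsible for the system that its behavior has changed and that a closer look is warranted.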

Evaluating the Impacts of AI on Society

Evaluating the impacts of AI on society entails assessing the social, economic, and ethical consequences of AI deployment. By analyzing the effects of AI on employment, privacy, discrimination, and various sectors, we can identify areas for improvement, potential harms, and unintended consequences. Evaluating these impacts enables policymakers, researchers, and stakeholders to make informed decisions based on evidence and take corrective measures as necessary.

Iterative Improvement of Ethical AI Policies

Ethical AI policies should be subject to iteration and improvement based on real-world experiences and evolving challenges. By incorporating feedback from various stakeholders, conducting research, and monitoring societal impacts, ethical frameworks can be updated to address emerging concerns, advancements in AI technologies, and changing societal needs. An iterative approach to ethical AI policies ensures their relevance, adaptability, and effectiveness in guiding responsible AI development and deployment.

In conclusion, understanding artificial intelligence requires an exploration of its definition, types, and significance. However, ethical concerns related to job automation, loss of human decision-making, bias, and privacy must also be examined. Regulatory measures, transparency, collaboration, and education play pivotal roles in guiding the ethical development and deployment of AI technologies. By incorporating human values, ensuring safety, and continuously monitoring and evaluating AI systems, we can navigate the complex landscape of AI ethically and responsibly.