Join us as we explore the long history of AI, from ancient ideas about artificial humans and robots, through the coining of the term “artificial intelligence” in the 1950s, to the present day. Along the way, AI has experienced periods of rapid growth, struggle, and innovation. We’ll look at the breakthroughs of the 1980s that led to an “AI boom,” the declines known as “AI winters,” and the advances in chess-playing programs, speech recognition, and robotics that have shaped our present reality. We’ll also see how AI has become an integral part of everyday life and what the future may hold for the field.

Early Concepts of AI

Artificial humans and robots in ancient history

In ancient history, there were intriguing concepts and depictions of artificial humans and robots. The idea of creating synthetic beings dates back thousands of years, with early civilizations imagining creatures made of clay or metal that could mimic human behavior. One notable example is the story of the golem in Jewish folklore, where a creature is brought to life using mystical means.

Leonardo da Vinci and his mechanical inventions

During the Renaissance, Leonardo da Vinci, best known as an artist, also worked in engineering and mechanics. His notebooks include sketches of mechanical devices and a humanoid automaton. The most famous of these is the mechanical knight, designed around 1495: an armored figure intended to move its arms, legs, and even its head.

The Mechanical Turk hoax

In the late 18th century, an invention known as the Mechanical Turk captivated audiences with its chess-playing abilities. Built by Wolfgang von Kempelen in 1770, it was presented as an automated machine that played chess against human opponents and often defeated them. It was later revealed that the Mechanical Turk was operated by a person hidden inside the cabinet, giving the illusion of artificial intelligence. The hoax shows how strongly people were drawn to the idea of machines that could mimic human intelligence.

The Rise of AI

Alan Turing’s influence on AI

In the mid-20th century, Alan Turing, an eminent mathematician and computer scientist, made significant contributions to what would become the field of AI. In 1936, Turing described a universal machine capable of carrying out any computation that can be expressed as an algorithm, and he showed that some problems cannot be solved by any such machine. In 1950, he proposed the “imitation game,” now known as the “Turing test,” as a way to evaluate machine intelligence. His work laid the foundation for the development of AI and sparked further research in the field.

The birth of information theory

In the 1940s, information theory emerged as a field of study, largely through the work of Claude Shannon. His 1948 paper “A Mathematical Theory of Communication” showed how information can be measured in bits and provided a mathematical framework for understanding data and communication. This theory played an important role in the development of AI by providing a basis for modeling and analyzing intelligent systems.
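
To make the idea of measuring information concrete, here is a minimal Python sketch of Shannon entropy, the average number of bits needed per symbol in a message. The function name `shannon_entropy` and the sample strings are illustrative assumptions, not something taken from Shannon's paper or from this article.

```python
import math
from collections import Counter


def shannon_entropy(message: str) -> float:
    """Return the average information per symbol of `message`, in bits."""
    counts = Counter(message)
    total = len(message)
    # Sum p * log2(1/p) over the observed symbol frequencies.
    return sum((n / total) * math.log2(total / n) for n in counts.values())


# Two equally likely symbols carry one bit each; a constant message carries none.
coin_flips = shannon_entropy("HT")
constant = shannon_entropy("AAAA")
```

A message that always repeats the same symbol has zero entropy because it is fully predictable, while a message that mixes symbols evenly has the highest entropy for its alphabet.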

Early AI research in the 1950s

The 1950s marked a turning point in the field of AI, with notable research breakthroughs and the establishment of AI as a distinct discipline. Researchers such as John McCarthy, Marvin Minsky, and Allen Newell made significant contributions to this nascent field. They explored topics such as problem-solving, logical reasoning, and symbolic representation, paving the way for further advancements in AI research.

Coining the term ‘artificial intelligence’

The term “artificial intelligence” was coined by John McCarthy in the 1955 proposal for a summer workshop held at Dartmouth College in 1956. McCarthy wrote the proposal together with Marvin Minsky, Nathaniel Rochester, and Claude Shannon, and the workshop brought researchers together to discuss the possibility of creating machines that could exhibit human-like intelligence. The term quickly gained traction and became the defining label for this emerging field of study.

Periods of Growth and Struggle

The AI winter of skepticism

While early AI research was met with enthusiasm and optimism, the field also experienced periods of skepticism and disappointment. By the mid-1970s, progress had fallen well short of the predictions made in the 1950s and 1960s, and critical assessments such as the United Kingdom’s 1973 Lighthill report contributed to a decline in interest and funding. This period, known as the first “AI winter,” was characterized by unrealized expectations and a lack of progress toward truly intelligent machines.

The rise of expert systems

Despite the setbacks, AI research in the 1970s saw the rise of expert systems. These systems combined a knowledge base of facts with if-then rules to mimic human expertise in a specific domain. Early research systems such as MYCIN targeted medical diagnosis, and by the 1980s expert systems were also being applied to business decision-making. They demonstrated the potential of AI to assist humans in complex problem-solving tasks.
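
The knowledge-base-and-rules idea can be sketched in a few lines of Python. This is a toy illustration of forward chaining, one common way rule-based systems derive conclusions; the rules, fact names, and the `forward_chain` function are hypothetical and do not come from any historical expert system.

```python
# Hypothetical rules: (facts that must all be known, fact to conclude).
RULES = [
    ({"fever", "cough"}, "possible_flu"),
    ({"possible_flu", "short_of_breath"}, "refer_to_doctor"),
]


def forward_chain(facts, rules):
    """Apply rules repeatedly until no new fact can be derived."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for conditions, conclusion in rules:
            # A rule fires when all of its conditions are already known.
            if conditions <= known and conclusion not in known:
                known.add(conclusion)
                changed = True
    return known


derived = forward_chain({"fever", "cough", "short_of_breath"}, RULES)
```

Here the second rule can only fire after the first has added `possible_flu`, which is why the loop keeps scanning the rules until nothing changes.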

Limitations and disappointments in the 1970s

The 1970s also brought to light some of the limitations and challenges of early AI systems. Many AI researchers encountered difficulties in dealing with real-world complexity and uncertainty. The lack of computational power and adequate algorithms hindered the progress of AI, leading to a sense of disappointment and tempered expectations.

AI Boom and Increased Funding

Breakthroughs in the 1980s

In the 1980s, AI research experienced a resurgence with significant breakthroughs in various subfields. The development of expert systems, advanced algorithms, and improved computing power propelled AI forward. Researchers made notable progress in natural language processing, pattern recognition, and robotic control, among other areas.

Increased commercial interest in AI

During the 1980s, there was a notable increase in commercial interest and investment in AI technology. Companies recognized the potential of AI to revolutionize industries and sought to capitalize on this emerging trend. AI startups and research laboratories flourished, further driving innovation and applying AI techniques to practical problems.

Government funding for AI research

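As the potential of AI became more evident, governments started providing substantial funding for AI research. Japan’s Fifth Generation Computer Systems project, launched in 1982, was among the most prominent programs, and it prompted comparable initiatives in the United States and the United Kingdom. Governments recognized the strategic importance of AI and its impact on various sectors, and the increased funding enabled researchers to pursue ambitious projects and advance the state of the art.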

Applications in industries like healthcare and finance

The 1980s also witnessed the integration of AI technologies into industries such as healthcare and finance. Expert systems were deployed to assist doctors in diagnosing diseases and recommending treatments. In the financial sector, AI algorithms were used for analyzing market trends and making predictions. These applications showcased the practical benefits of AI in diverse domains.

The Decline of Interest and Funding

The AI winter of the late 1980s

Despite the progress made in the 1980s, the field of AI faced another decline in interest and funding during the late 1980s and early 1990s. High expectations and overpromising of AI capabilities contributed to a sense of disillusionment when the practical applications fell short. This resulted in reduced funding and a shift in focus to other emerging technologies.

Impacts of funding cuts and skepticism

The decline in funding had a significant impact on AI research during this period. Many AI projects were abandoned due to a lack of resources and support. Researchers faced challenges in securing funding for their work, which hindered their ability to push the boundaries of AI. Skepticism and disillusionment led to a general slowdown in the development of AI technologies.

Shifts in research focus to other fields

With the decline of interest and funding, some researchers shifted their focus from rule-based systems toward statistical approaches such as machine learning and data mining. These areas offered new opportunities for progress and showed promise in achieving intelligent behavior through statistical analysis and pattern recognition. This shift paved the way for advancements in AI research in the following decades.

Advancements in AI Research

Chess-playing programs and Deep Blue

In the late 1990s, AI made headlines with remarkable progress in chess-playing programs. In 1997, IBM’s Deep Blue defeated the reigning world chess champion, Garry Kasparov, in a highly publicized six-game rematch, after losing to him the previous year. This achievement showcased the power of AI techniques and demonstrated how machines could surpass human capabilities in specific domains.

Speech recognition technology

Another area of significant advancement in AI research is speech recognition technology. The ability of machines to recognize and interpret human speech has improved dramatically over the years. This progress has enabled the development of virtual assistants like Siri and Alexa, which can carry out spoken commands and provide information.

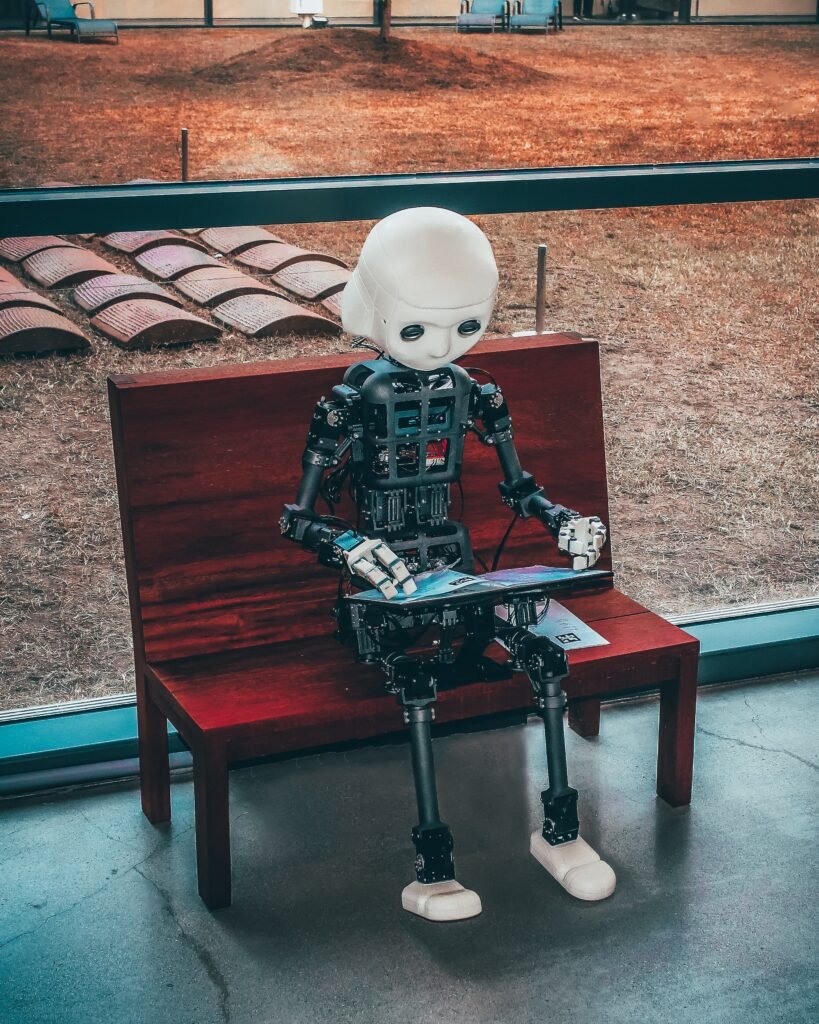

Robotics and automation

Advancements in robotics have also transformed the field of AI. From industrial automation to humanoid robots, AI-powered machines are capable of performing complex physical tasks. Robots can now navigate challenging environments, interact with humans, and even learn from their experiences. These developments have opened up new possibilities in areas such as manufacturing, healthcare, and exploration.

Machine learning and neural networks

Machine learning, a subset of AI, has seen tremendous growth in recent years. Neural networks, loosely inspired by the structure of the human brain, have become a fundamental tool in machine learning. They now underpin applications including image and speech recognition, natural language processing, and recommendation systems.

AI in Everyday Life

Virtual assistants like Siri and Alexa

One of the most visible examples of AI in everyday life is the proliferation of virtual assistants like Siri and Alexa. These intelligent voice-activated systems can answer questions, play music, set reminders, and control smart devices. They use AI algorithms to understand and respond to natural language, making them increasingly reliable and user-friendly.

Social media algorithms and recommendation systems

AI algorithms play a major role in shaping the content we see on social media platforms. Recommendation systems analyze user preferences and behavior to suggest relevant content, products, and services. These algorithms have become vital for personalized user experiences and have transformed the way we consume information and engage with online platforms.

AI in healthcare and self-driving cars

In the healthcare industry, AI has made significant contributions to diagnosis, treatment planning, and patient care. Machine learning algorithms can analyze vast amounts of medical data to detect patterns and predict outcomes. Additionally, AI is paving the way for self-driving cars, with advanced sensors, computer vision, and deep learning algorithms enabling vehicles to navigate autonomously.

Ethical concerns and debates about AI

As AI becomes more prevalent, ethical concerns and debates surrounding its use have emerged. Questions about privacy, bias, and job displacement have prompted discussions on how to regulate and govern AI technologies. Ensuring ethical AI development and mitigating potential risks are key challenges that society must address as AI continues to advance.

The Future of AI

Advancements in deep learning

Deep learning, a subset of machine learning, holds much promise for the future of AI. By leveraging neural networks with multiple layers, deep learning algorithms can extract complex patterns and representations from large datasets. This approach has revolutionized areas such as computer vision, natural language processing, and autonomous systems.
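
As a rough illustration of what “multiple layers” means, the following Python sketch passes an input through two fully connected layers in sequence. The weights, layer sizes, and function names are made up for the example; a real deep learning system would learn its weights from data and would normally use a dedicated library.

```python
import math


def sigmoid(x):
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def dense(inputs, weights, biases):
    """One fully connected layer: a weighted sum per neuron, then sigmoid."""
    return [
        sigmoid(sum(w * x for w, x in zip(neuron_weights, inputs)) + b)
        for neuron_weights, b in zip(weights, biases)
    ]


def forward(inputs, layers):
    """Feed the inputs through each layer in turn."""
    activations = inputs
    for weights, biases in layers:
        activations = dense(activations, weights, biases)
    return activations


# Hypothetical, hand-picked weights: 2 inputs -> 3 hidden neurons -> 1 output.
LAYERS = [
    ([[0.5, -0.2], [0.1, 0.4], [-0.3, 0.8]], [0.0, 0.1, -0.1]),
    ([[0.7, -0.5, 0.2]], [0.05]),
]

output = forward([1.0, 0.5], LAYERS)
```

Each layer transforms the output of the previous one, which is how stacked layers can build up more complex representations than a single layer could.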

Artificial general intelligence (AGI) and its challenges

Artificial general intelligence, or AGI, refers to a level of AI capable of performing any intellectual task that a human being can do. Achieving AGI remains a significant challenge due to the complexity and diversity of human intelligence. Researchers must address issues of common sense reasoning, understanding context, and ethical decision-making to progress towards true human-level AI.

AI’s potential in various industries

The potential of AI extends to various industries and sectors. In healthcare, AI can improve patient outcomes, assist in diagnosis, and streamline administrative tasks. In finance, AI algorithms can detect fraud, optimize investments, and improve risk assessment. Fields such as agriculture, transportation, and energy can benefit from AI-driven solutions for increased efficiency and sustainability.

Impact of AI on the job market

The rise of AI has raised concerns about its impact on the job market. While AI automation may lead to the replacement of certain tasks and job roles, it also has the potential to create new opportunities. Human-AI collaboration and the need for AI-specific skill sets are likely to shape the future of work, requiring individuals to adapt and acquire new skills to thrive in the evolving job market.

In conclusion, AI has come a long way since its early concepts and has become an integral part of our daily lives. From ancient depictions of artificial humans to today’s debates about ethics, the field has evolved through both advances and setbacks. Its future holds considerable potential, driven by progress in deep learning, the pursuit of AGI, and growing adoption across industries and the job market. As AI continues to develop, it is likely to reshape how we live, work, and interact with the world around us.