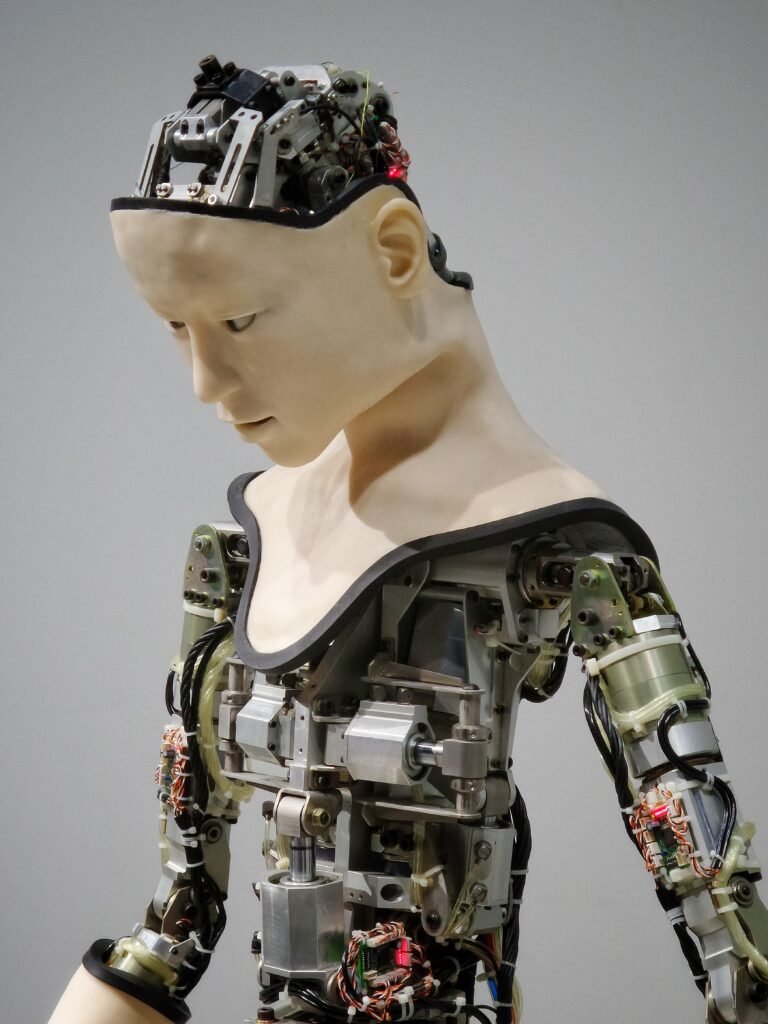

Imagine a world where machines can perform tasks that were once exclusive to human intelligence. Sounds impressive, right? However, as technology advances, concerns are growing about the potential negative consequences of artificial intelligence (AI). In this article, we will explore why AI, despite its impressive capabilities, has the potential to be detrimental to society. From ethical dilemmas to job displacement, brace yourself for a deep dive into the darker side of artificial intelligence.

Negative impact on employment

Automation of jobs

Artificial Intelligence (AI) has facilitated the automation of various tasks and processes, leading to the displacement of many jobs previously held by human workers. AI-powered machines can perform tasks with greater efficiency and accuracy, often at a lower cost than human labor. This automation trend has the potential to significantly reduce the need for human workers in industries such as manufacturing, transportation, and customer service. While efficiency gains can be beneficial for businesses, the widespread adoption of AI automation can result in job losses and potential unemployment for many individuals.

Job displacement

The increasing role of AI in the workforce poses a significant challenge to job security for millions of workers worldwide. As AI technologies advance, more jobs are at risk of being replaced by machines. From factory workers to accountants, numerous professions face the possibility of automation. Without proper retraining programs or opportunities to transition into new industries, individuals whose jobs are taken over by AI-powered systems may struggle to find alternative employment, exacerbating the issue of unemployment.

Inequality in job opportunities

The automation of jobs driven by AI could potentially widen the existing inequality gap in job opportunities. As AI becomes increasingly integrated into various sectors, individuals with the necessary skills and knowledge to work alongside AI systems will have a greater advantage in securing employment. However, individuals without access to quality education or the ability to gain AI-related skills may be left behind, further exacerbating social and economic inequalities. This disparity in job opportunities could lead to a more divided society where only a select few benefit from the advancements of AI.

Lack of new job creation

Another concern is that AI may not create new jobs fast enough. While AI technology may displace jobs in some areas, it also has the potential to create new job opportunities in other fields. However, the rate at which AI-related jobs are being created may not be sufficient to offset the losses resulting from automation. This imbalance could lead to a significant mismatch between the skills needed in the job market and the skills workers possess, potentially resulting in prolonged unemployment and underutilization of human potential.

Ethical concerns

Privacy invasion

AI systems are often designed to collect and analyze vast amounts of personal data, raising concerns about privacy invasion. From targeted advertising algorithms to facial recognition technologies, AI has the potential to gather and exploit personal information without individuals’ explicit consent. This invasion of privacy not only violates personal boundaries but also raises questions about the ethical use and management of personal data in an increasingly AI-driven world.

Data security risks

As AI systems rely heavily on data, the risk of data breaches and security vulnerabilities becomes a pressing concern. The vast quantity of data collected and stored by AI systems presents an attractive target for cybercriminals. If these systems are compromised, sensitive information such as personally identifiable information or financial data could be exposed, leading to potential identity theft or other harmful consequences. Ensuring robust data security measures is therefore crucial to prevent the misuse of, or unauthorized access to, personal information.

Lack of accountability

One of the ethical challenges associated with AI relates to the lack of accountability in decision-making. While AI systems can process large amounts of data and make recommendations or decisions based on that data, they often cannot explain their reasoning or justify their actions in a way that humans can understand. This lack of transparency raises concerns that biased or unfair decisions could be made without the affected individuals knowing the reasoning behind them, making it difficult to hold anyone accountable for the actions of AI systems.

Bias and discrimination

AI systems are not immune to bias and discrimination. When trained on biased datasets or programmed with flawed algorithms, AI systems can perpetuate and amplify existing biases in society. This bias can lead to discriminatory outcomes in areas such as hiring processes, loan approvals, or criminal justice systems. The potential for AI to unintentionally replicate and perpetuate societal biases underscores the need for thorough scrutiny and testing to ensure fairness and equality in AI applications.

Threat to human existence

Potential superintelligence

One of the most significant concerns regarding AI is the potential development of superintelligent AI systems that surpass human capabilities and understanding. While current AI technology is limited in its ability to reason and comprehend complex situations, there is a growing concern that future iterations of AI could become far more intelligent. This potential superintelligence raises questions about the control and influence humans will have over these AI systems, and whether they could pose a threat to human existence if they were to escape human control.

Uncontrolled decision-making

Related to the threat of superintelligence, the ability of AI systems to make decisions autonomously without human oversight raises concerns about the potential for uncontrolled decision-making. As AI technology advances, there is a risk that AI systems may make decisions that humans cannot comprehend or control. If these decisions have significant impacts on society, such as in critical infrastructure or financial systems, the lack of human control and understanding could have severe consequences.

Weaponization of AI

The development and deployment of AI in military applications raise concerns about the weaponization of AI technology. AI-powered autonomous weapons systems could potentially be used in warfare, leading to unprecedented levels of destruction and loss of life. The lack of human involvement in the decision-making process within such systems raises ethical questions about accountability and the potential for indiscriminate use of force. Addressing the ethical implications of AI in military contexts is crucial to ensure the responsible and ethical use of AI technology.

Loss of human control

The increasing reliance on AI systems and the potential for autonomous decision-making raise concerns about the loss of human control over critical aspects of society. If AI systems make decisions or control essential systems without human oversight, it becomes challenging for individuals to determine, influence, or correct these actions. The loss of human control could undermine democratic processes and societal values, creating a potential power imbalance between humans and AI systems.

Social and psychological impact

Dependence on AI

The growing dependence on AI systems and technologies can have profound social and psychological impacts on individuals and society as a whole. As AI becomes integrated into various aspects of daily life, from personal assistants to smart home devices, individuals may develop a reliance on AI for decision-making, problem-solving, and even basic tasks. This dependence on AI could lead to a reduced sense of self-efficacy and self-reliance, potentially diminishing individuals’ abilities to think critically and solve problems independently.

Isolation and loneliness

The increasing use of AI-powered technologies in social interactions, such as chatbots or virtual assistants, may contribute to feelings of isolation and loneliness. While these technologies aim to simulate human-like interactions, they cannot fully replace genuine human connections. Relying heavily on AI for social interactions may lead to a decrease in meaningful human relationships and a sense of detachment from the real world, ultimately impacting individuals’ mental well-being and emotional fulfillment.

Degradation of personal skills

As AI systems take over more tasks previously carried out by humans, there is a risk of the degradation of personal skills and capabilities. Skills that were once essential for certain jobs may become obsolete or diminished due to AI automation. For example, the reliance on AI for calculations and analysis may erode individuals’ mathematical abilities over time. This degradation of personal skills not only limits individuals’ potential but also decreases the overall human capacity to think critically and adapt to new challenges.

Unemployment-related stress

The negative impact of AI on employment and the potential for increased job insecurity can lead to heightened levels of stress and anxiety among individuals. Losing a job due to automation or struggling to find new employment in an AI-dominated job market can have severe psychological and emotional consequences. The fear of unemployment, financial instability, and the inability to support oneself or one’s family can harm individuals’ mental health and overall well-being, creating a substantial social and psychological burden.

Economic challenges

Wealth concentration

The rise of AI technology has the potential to exacerbate the issue of wealth concentration. As AI automation replaces human workers in various industries, the profits generated from increased productivity might accrue primarily to the owners of AI systems and large corporations. This concentration of wealth could further widen the economic gap between the rich and the poor, perpetuating social inequalities and hindering economic mobility for disadvantaged individuals.

Increase in income inequality

The displacement of certain jobs and the concentration of wealth in the hands of a few have the potential to increase income inequality. Those who possess the skills needed to work alongside AI systems or who own AI-driven businesses may experience significant income gains, while others who lack these skills or work in industries susceptible to AI automation may struggle to maintain a decent standard of living. This growing income inequality can lead to social unrest and political instability, and can hinder overall economic growth and prosperity.

Displacement of certain industries

The adoption of AI technology could lead to the displacement of entire industries. Jobs that are routine-based or easily automated may be at the highest risk of being replaced by AI systems. For example, self-driving vehicles may disrupt the transportation industry, causing severe job losses among truck drivers and taxi drivers. The displacement of entire industries not only affects the individuals employed in those sectors but also creates significant economic challenges for entire communities and regions that rely on those industries for their livelihoods.

Growing reliance on algorithms

The increasing reliance on algorithms and AI systems to make important economic decisions carries risks of its own. Key decisions related to investments, resource allocation, or market predictions are increasingly influenced or even determined by algorithms. The opacity of these algorithms and their potential biases can undermine trust and present economic risks. Over-reliance on algorithms may also crowd out human judgment and intuition in decision-making processes, so that critical factors are overlooked and economic vulnerabilities persist.

Inadequate decision-making

Lack of common sense

While AI systems excel at processing vast amounts of data and making reasoned decisions based on patterns, they often lack common sense reasoning and intuition. AI may struggle to interpret and understand situations that involve contextual knowledge or require common sense understanding. This limitation can result in AI systems making decisions that seem logical based on available information but fail to account for relevant nuances or practical considerations.

Inability to interpret information contextually

AI systems often operate within predefined parameters and rely on existing data patterns to make decisions. They may struggle to interpret and understand information contextually, leading to potentially flawed or biased conclusions. For example, an AI system tasked with predicting crime rates may rely on historical crime data without considering broader contextual factors such as socioeconomic conditions or changes in policing strategies. The lack of contextual understanding can lead to inaccurate analyses and ineffective decision-making.

Racial and gender biases

AI algorithms can inadvertently perpetuate and amplify racial and gender biases present in the data on which they are trained. If historical datasets are biased or if the algorithms are not developed with diversity and inclusivity in mind, AI systems can reinforce discriminatory practices and outcomes. For instance, facial recognition algorithms have been found to have higher error rates for individuals with darker skin tones, leading to potential racial biases in surveillance systems. Addressing these biases and ensuring fair and equitable AI algorithms is crucial to mitigate the negative impact on marginalized groups.
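One simple way to check for the kind of disparity described above is to compare a system's error rate across demographic groups. The following is a minimal sketch in Python, not a full fairness audit: the function names, the group labels, and the toy records are all hypothetical and chosen only for illustration.

```python
from collections import defaultdict

def error_rate_by_group(records):
    """Return {group: share of records where the prediction was wrong}.

    Each record is a (group, predicted, actual) tuple.
    """
    totals = defaultdict(int)
    errors = defaultdict(int)
    for group, predicted, actual in records:
        totals[group] += 1
        if predicted != actual:
            errors[group] += 1
    return {group: errors[group] / totals[group] for group in totals}

def max_error_gap(rates):
    """Difference between the highest and lowest per-group error rate."""
    return max(rates.values()) - min(rates.values())

# Hypothetical toy data, invented for this example (not real measurements).
sample = [
    ("group_a", 1, 1), ("group_a", 0, 0), ("group_a", 1, 1), ("group_a", 0, 1),
    ("group_b", 1, 0), ("group_b", 0, 1), ("group_b", 1, 1), ("group_b", 0, 0),
]

rates = error_rate_by_group(sample)
print(rates)
print(max_error_gap(rates))
```

A large gap between groups does not by itself explain why a system is biased, but it is a signal that the training data or the model deserves closer scrutiny before deployment.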

Errors resulting from over-reliance

Over-reliance on AI systems can lead to errors and mistakes that may have significant consequences. While AI technology can enhance decision-making processes, humans must not abdicate their responsibility to scrutinize and validate the outputs generated by AI systems. Blindly accepting AI-driven recommendations without critical evaluation or human judgment can lead to costly errors and missed opportunities. Striking a balance between AI assistance and human oversight is essential to ensure accurate decision-making and avoid detrimental outcomes.

Loss of human touch

Decreased empathy

AI lacks the ability to interpret and convey genuine empathy, a trait deeply rooted in human nature. While AI systems can simulate empathy to a certain extent, their responses are programmed and lack the emotional depth and understanding that human interaction offers. The loss of human touch, empathy, and emotional connection in various domains, such as healthcare or counseling, can have profound negative consequences on individuals’ well-being and satisfaction with services.

Reduced human interaction

The integration of AI into various aspects of daily life may lead to a reduction in human interaction. AI-powered technologies, such as virtual assistants or chatbots, may offer convenience and efficiency, but they cannot replace the richness and complexity of human interaction. Over-reliance on AI for social interactions can lead to fewer opportunities for meaningful connections and human bonding, potentially resulting in adverse psychological and emotional effects, such as increased feelings of loneliness and isolation.

Diminished emotional intelligence

Emotional intelligence, the ability to recognize and manage emotions in oneself and others, is a fundamental aspect of human interaction. However, AI systems, even those designed to recognize and respond to emotions, lack the inherent emotional intelligence that humans possess. In fields such as therapy or counseling, the absence of emotional intelligence in AI systems can limit their effectiveness and hinder the development of genuine therapeutic relationships based on mutual understanding and empathy.

Loss of personal connections

The widespread integration of AI in various domains can lead to a loss of personal connections. For example, the increasing use of AI-powered customer service chatbots may replace human customer service representatives. While chatbots may provide quick responses and resolve minor issues, they lack the personal touch and understanding that human representatives can offer. This loss of personal connections can impact individuals’ experience and satisfaction, leading to a sense of detachment and increased dissatisfaction with AI-mediated interactions.

Unpredictable consequences

Unforeseen ethical dilemmas

As AI technology advances, new and unanticipated ethical dilemmas may arise. The complexity of AI systems and their potential to shape various aspects of society can lead to unforeseen ethical challenges that require careful consideration and analysis. For instance, self-driving cars may face ethical dilemmas when they encounter situations where human lives are at risk, forcing the AI to make morally difficult decisions. Anticipating and addressing these challenges is crucial to ensure the responsible and ethical development and use of AI technology.

Negative impacts on societal norms

AI technology has the potential to shape and influence societal norms and values. However, the introduction of AI-driven applications and systems can also inadvertently challenge or undermine existing norms and values. For example, the use of AI in generating deepfake videos or spreading misinformation can erode trust in media and distort the perception of reality. Understanding the potential negative impacts on societal norms is essential to minimize harm and ensure the responsible integration of AI into society.

Unintentional consequences

The complexity of AI systems, combined with the complexity of the social systems they interact with, can lead to unintended consequences. Unforeseen issues, biases, or unintended uses of AI technology may arise as it becomes integrated into various domains. For instance, bias in facial recognition algorithms could lead to false arrests or wrongful convictions. The potential unintended consequences of AI highlight the need for thorough testing and evaluation, ongoing monitoring, and the ability to adapt and address issues as they arise.

Challenges in regulation and governance

The rapid development and deployment of AI technology present significant challenges for regulatory frameworks and governance. Keeping pace with technological advancements and ensuring ethical and responsible use of AI requires robust and adaptable regulatory measures. Balancing innovation and the protection of individuals’ rights and societal well-being is a complex task. Developing clear guidelines and standards for the development, deployment, and use of AI technology is necessary to address potential risks and ensure accountability.

Limited understanding of humanity

Inability to comprehend human emotions

AI systems struggle to understand and interpret human emotions accurately. While AI can be trained to recognize facial expressions or speech patterns associated with certain emotions, it lacks the ability to truly comprehend or empathize with human emotions in a nuanced manner. This limitation prevents AI systems from fully grasping the complexities of human experiences, potentially leading to misinterpretation or misrepresentation of emotions, especially in contexts where emotional understanding is crucial, such as healthcare or mental health support.

Difficulty understanding cultural nuances

AI systems may have limitations in understanding and accommodating cultural nuances and differences. Language processing algorithms, for example, may struggle to accurately interpret slang, colloquialisms, or cultural references. This lack of understanding can lead to communication breakdowns and misunderstandings, and can even reinforce cultural biases or stereotypes. Addressing the challenges of cultural understanding in AI systems is crucial to ensure inclusive and culturally sensitive interactions.

Inaccurate representation of human experiences

AI systems rely on training data to learn and make predictions or decisions. If the training data is limited or unrepresentative of the diversity of human experiences, AI systems can produce inaccurate or biased results. AI algorithms may fail to capture the full spectrum of human behaviors, preferences, or needs, leading to exclusionary or discriminatory outcomes. Ensuring diverse and inclusive training datasets is essential to minimize the risk of inaccurate representations of human experiences.

Challenge in decision-making for the greater good

AI systems struggle to make decisions that prioritize the greater good or that are morally justifiable. Challenges arise in defining what constitutes the greater good and in determining which ethical principles should guide AI decision-making. AI systems lack the moral reasoning and subjective judgment necessary for complex ethical dilemmas. Aligning AI decision-making with societal values and the greater good requires careful ethical consideration and human guidance.

Erosion of job satisfaction

Repetitive and mundane tasks

AI automation can lead to job dissatisfaction when the tasks left to workers become repetitive and mundane. Jobs that predominantly involve routine-based or monotonous tasks are at a higher risk of being automated, and workers whose roles are reshaped around AI systems may be left with a reduced sense of fulfillment or accomplishment. The loss of challenging and intellectually stimulating work can erode job satisfaction and result in disengagement.

Lack of fulfillment

The replacement of human workers with AI systems may undermine individuals’ sense of purpose and fulfillment in their work. For many, job satisfaction comes from contributing their unique skills, creativity, and expertise to a meaningful endeavor. If AI systems take over tasks that were once performed by humans, individuals may feel disconnected from their work and experience a lack of fulfillment, leading to reduced job satisfaction and overall well-being.

Inability to showcase creativity

AI technology’s limitations in creativity and innovation can suppress individuals’ ability to showcase their own creative abilities in the workplace. Jobs that rely on creativity, artistic expression, or imaginative problem-solving may be less susceptible to AI automation. However, in industries where AI takes center stage, individuals may feel constrained or restricted in their ability to express and develop their creative potential. This inability to showcase creativity can decrease job satisfaction and hinder personal growth and development.

Decreased motivation and engagement

The threat of job displacement and the increasing role of AI in the workforce can negatively impact individuals’ motivation and engagement in their work. The uncertainty surrounding the future of work and the potential for job loss can create a sense of insecurity and demotivation. When individuals feel that their contributions are undervalued or easily replaceable, their motivation and engagement in their work are likely to decrease, leading to a decline in job satisfaction and overall productivity.

In conclusion, while AI technology offers numerous benefits and advancements in various fields, it is crucial to recognize and address its potential negative impacts. From job displacement and economic challenges to ethical concerns and threats to human existence, a comprehensive understanding of these issues is necessary to ensure the responsible development, deployment, and use of AI in society. Striking a balance between harnessing the potential of AI and mitigating its detrimental effects is key to creating a future where AI technology serves the best interests of humanity.